VMware Event Broker on Kubernetes with Knative functions - part 1

Overview

As mentioned in some previous posts (here or here), I do not deploy the appliance-based packaging of the VMware Event Broker, aka VEBA (VMware Event Broker Appliance). Instead, I prefer to reuse existing Kubernetes clusters to host the VMware Event Router and its associated functions.

Currently, most of my automation work relies on OpenFaaS® functions, with Argo Workflows (triggered by OpenFaaS) handling long-running tasks.

Since the v0.5.0 release, the VMware Event Broker supports a new processor: knative.

This first post covers the deployment of the Knative components, in preparation for deploying the VMware Event Broker with the Helm chart in part 2.

About Knative

Knative is a Google-held, Kubernetes-based platform to build, deploy, and manage modern serverless workloads. The project is made of two major components:

- Knative Serving: Easily manage stateless services on Kubernetes by reducing the developer effort required for auto-scaling, networking, and rollouts.

- Knative Eventing: Easily route events between on-cluster and off-cluster components by exposing event routing as configuration rather than embedded in code.

Some major serverless cloud services, such as Red Hat OpenShift Serverless and Google Cloud Run, are now based on or compatible with the Knative API.

Knative Eventing provides an abstraction of the messaging layer that supports multiple, pluggable event sources. Several delivery modes (fan-out, direct) are also supported, enabling a variety of use cases. Here is an overview of how events flow through the Eventing component:

Broker Trigger Diagram (src: https://knative.dev/docs/eventing/)

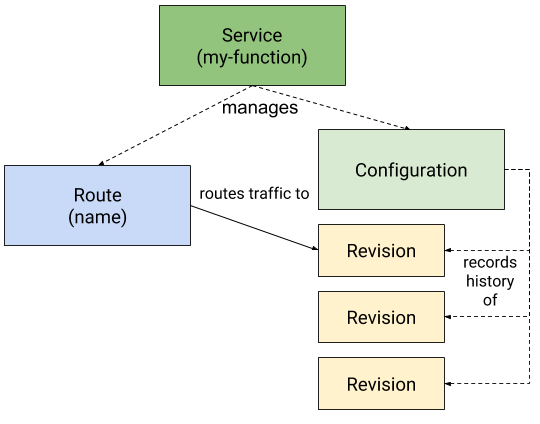

The Knative Serving project provides middleware primitives that enable the deployment of serverless containers with automatic scaling (up, and down to zero). The component routes traffic to deployed applications and manages versioning, rollbacks, load testing, etc.

Knative service overview (src: https://knative.dev/docs/serving/)
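To make this more concrete, here is a minimal Knative Service sketch. It assumes Serving is already installed (see the next section); the `hello` name, the `gcr.io/knative-samples/helloworld-go` sample image, the `deploy_hello_service` function and the `KUBECTL` override variable are illustrative choices of mine, not requirements. From this single object, Serving derives the route, the configuration and the revisions, and lets an idle revision scale down to zero.

```shell
# Hypothetical helper; set KUBECTL to another command for a dry run.
KUBECTL="${KUBECTL:-kubectl}"

deploy_hello_service() {
  local ns="${1:-default}"
  # A single serving.knative.dev/v1 Service: Knative creates the
  # Route, Configuration and Revision objects from it.
  "$KUBECTL" apply -f - <<EOF
apiVersion: serving.knative.dev/v1
kind: Service
metadata:
  name: hello
  namespace: ${ns}
spec:
  template:
    metadata:
      annotations:
        # Cap the autoscaler at 3 pods; minScale is left unset, so an
        # idle revision can scale to zero (the default behavior).
        autoscaling.knative.dev/maxScale: "3"
    spec:
      containers:
        - image: gcr.io/knative-samples/helloworld-go
          env:
            - name: TARGET
              value: "Knative"
EOF
}
```

Usage: `deploy_hello_service default`, then `kubectl get ksvc hello` to see the URL assigned by Serving.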

Deploy Knative on your cluster

Setup description

In the following setup, we will deploy the Serving and Eventing components, with Kourier as the ingress for Knative Serving.

Kourier is a lightweight alternative to the Istio ingress, as its deployment consists only of an Envoy proxy and a control plane for it.

I assume that you already have a working Kubernetes cluster.

If not, you can try kind to deploy a local cluster for development purposes.

The overall process also relies on Helm to deploy the VMware Event Router (in part 2).

We will use the latest Knative release at the time of writing, but you can probably change the following setting to match the latest available release:

```shell
export KN_VERSION="v0.22.0"
```
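Every manifest in this post is downloaded from a GitHub release URL that embeds the version, so a small helper can keep them all pinned to `KN_VERSION`. This is a sketch; the `kn_release_url` name is my own, and the URL layout simply mirrors the `releases/download/<version>/<asset>` links used below.

```shell
# Hypothetical helper: print the release asset URL of a Knative project,
# following the releases/download/<version>/<asset> layout.
kn_release_url() {
  local project="$1"   # e.g. serving, eventing, client
  local version="$2"   # e.g. v0.22.0
  local asset="$3"     # e.g. serving-crds.yaml
  printf 'https://github.com/knative/%s/releases/download/%s/%s\n' \
    "$project" "$version" "$asset"
}
```

For instance: `kubectl apply -f "$(kn_release_url serving "$KN_VERSION" serving-crds.yaml)"`.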

Knative Serving

The Knative documentation is really helpful for the following steps, so I will only put together the commands I used in my setup:

```shell
# Custom resource definitions
kubectl apply -f https://github.com/knative/serving/releases/download/${KN_VERSION}/serving-crds.yaml

# Knative Serving core components
kubectl apply -f https://github.com/knative/serving/releases/download/${KN_VERSION}/serving-core.yaml
```

A new `knative-serving` namespace is created on the cluster, with some core resources.
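If you prefer to script these two steps, here is a minimal sketch, assuming a POSIX shell. The `install_knative_serving` function and the `KUBECTL` variable (set it to `echo` for a dry run) are my own conventions, not part of Knative; the final `kubectl wait` blocks until every deployment in `knative-serving` reports the `Available` condition.

```shell
# Hypothetical helper; KUBECTL=echo turns it into a dry run.
KUBECTL="${KUBECTL:-kubectl}"

install_knative_serving() {
  local version="${1:?usage: install_knative_serving <version>}"
  local base="https://github.com/knative/serving/releases/download/${version}"
  # CRDs first, so the core manifest can use the new resource types
  "$KUBECTL" apply -f "${base}/serving-crds.yaml" || return 1
  "$KUBECTL" apply -f "${base}/serving-core.yaml" || return 1
  # Block until activator, autoscaler, controller, webhook... are ready
  "$KUBECTL" wait deployment --all --for=condition=Available \
    --namespace knative-serving --timeout=300s
}
```

Usage: `install_knative_serving "$KN_VERSION"`.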

Then we install and configure Kourier to act as our Ingress controller:

```shell
# Install and configure Kourier
kubectl apply -f https://raw.githubusercontent.com/knative/serving/${KN_VERSION}/third_party/kourier-latest/kourier.yaml

# Configure Knative Serving to use Kourier
kubectl patch configmap/config-network --namespace knative-serving --type merge --patch '{"data":{"ingress.class":"kourier.ingress.networking.knative.dev"}}'
```
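To double-check that the patch was taken into account, you can read the `ingress.class` key back from the `config-network` ConfigMap. A minimal sketch, assuming a POSIX shell; `check_ingress_class` and the `KUBECTL` override are hypothetical names of mine.

```shell
# Hypothetical helper; KUBECTL can point to another command for testing.
KUBECTL="${KUBECTL:-kubectl}"
EXPECTED_INGRESS_CLASS="kourier.ingress.networking.knative.dev"

check_ingress_class() {
  local actual
  # The dot inside the key name must be escaped in the jsonpath
  actual="$("$KUBECTL" get configmap/config-network --namespace knative-serving \
    -o jsonpath='{.data.ingress\.class}')" || return 1
  if [ "$actual" = "$EXPECTED_INGRESS_CLASS" ]; then
    echo "ingress.class OK: ${actual}"
  else
    echo "unexpected ingress.class: '${actual}'" >&2
    return 1
  fi
}
```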

Depending on your target platform, the EXTERNAL-IP of the kourier service may or may not already be set:

```shell
kubectl -n kourier-system get service kourier
# Output
NAME      TYPE           CLUSTER-IP      EXTERNAL-IP   PORT(S)                      AGE
kourier   LoadBalancer   10.43.165.137   <pending>     80:30471/TCP,443:32405/TCP   10m
```

If the value is `<pending>` (as in my on-premises setup), you can manually assign an external IP address to the service:

```shell
kubectl patch service kourier -p '{"spec": {"type": "LoadBalancer", "externalIPs":["192.168.1.36"]}}' -n kourier-system

kubectl -n kourier-system get service kourier
# Output
NAME      TYPE           CLUSTER-IP      EXTERNAL-IP    PORT(S)                      AGE
kourier   LoadBalancer   10.43.165.137   192.168.1.36   80:30471/TCP,443:32405/TCP   10m
```
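On a platform where the external IP is sometimes assigned and sometimes not, the check-then-patch sequence above can be scripted so that the manual IP is only set while the service is still `<pending>`. This is a sketch under a few assumptions: it parses the default `kubectl get service` table (EXTERNAL-IP as the 4th column), and the function names and `KUBECTL` override are mine.

```shell
# Hypothetical helpers; KUBECTL can point to another command for testing.
KUBECTL="${KUBECTL:-kubectl}"

# Print the EXTERNAL-IP column (4th field) of the first data row of a
# `kubectl get service` table read on stdin.
external_ip_column() {
  awk 'NR == 2 { print $4 }'
}

# Assign a manual external IP to kourier only while it is still <pending>.
ensure_kourier_external_ip() {
  local ip="${1:?usage: ensure_kourier_external_ip <ip>}"
  local current patch
  current="$("$KUBECTL" -n kourier-system get service kourier | external_ip_column)"
  case "$current" in
    "")
      echo "could not read the kourier service" >&2
      return 1
      ;;
    "<pending>")
      # Same JSON patch as the manual command, with the IP as a parameter
      patch="$(printf '{"spec": {"type": "LoadBalancer", "externalIPs": ["%s"]}}' "$ip")"
      "$KUBECTL" -n kourier-system patch service kourier -p "$patch"
      ;;
    *)
      echo "kourier already exposed on ${current}"
      ;;
  esac
}
```

Usage: `ensure_kourier_external_ip 192.168.1.36`.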

You can take a quick look at the pods in the `knative-serving` namespace to check that everything is running fine:

```shell
kubectl get pods -n knative-serving
# Output
NAME                                      READY   STATUS    RESTARTS   AGE
3scale-kourier-control-67c86f4f69-6mnwr   1/1     Running   0          11m
activator-799bbf59dc-s6vls                1/1     Running   0          11m
autoscaler-75895c6c95-mbnqw               1/1     Running   0          11m
controller-57956677cf-74hp9               1/1     Running   0          11m
webhook-ff79fddb7-gjvwq                   1/1     Running   0          11m
```
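Rather than eyeballing this list, a small filter can check it for you. A minimal sketch, assuming the default `kubectl get pods` column layout (NAME, READY, STATUS, ...); `all_pods_ready` is a hypothetical helper name. It treats any status other than `Running` as a failure, so pods of completed jobs would be reported too.

```shell
# Hypothetical helper: read a `kubectl get pods` table on stdin and succeed
# only if every pod is Running with all containers ready (READY shows n/n).
all_pods_ready() {
  awk '
    NR == 1 { next }                      # skip the header row
    NF == 0 { next }                      # ignore blank lines
    {
      split($2, r, "/")                   # READY column, e.g. 1/1
      if ($3 != "Running" || r[1] != r[2]) {
        print "not ready: " $1 > "/dev/stderr"
        bad = 1
      }
      seen = 1
    }
    END { exit (bad || !seen) }           # also fail on an empty list
  '
}
```

Usage: `kubectl get pods -n knative-serving | all_pods_ready && echo "Knative Serving is up"`.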

Knative Eventing

As with the Serving component, you can rely on very clear documentation to install the Eventing component:

```shell
# Custom resource definitions
kubectl apply -f https://github.com/knative/eventing/releases/download/${KN_VERSION}/eventing-crds.yaml

# Knative Eventing core components
kubectl apply -f https://github.com/knative/eventing/releases/download/${KN_VERSION}/eventing-core.yaml

# Install the In-Memory channel (messaging) layer
kubectl apply -f https://github.com/knative/eventing/releases/download/${KN_VERSION}/in-memory-channel.yaml

# Install the MT channel-based broker
kubectl apply -f https://github.com/knative/eventing/releases/download/${KN_VERSION}/mt-channel-broker.yaml
```
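The same four manifests can be applied in a loop, which keeps all of them on a single version. A sketch, assuming a POSIX shell; `install_knative_eventing` and the `KUBECTL` override are my own names, and the order (CRDs, core, channel, broker) follows the commands above.

```shell
# Hypothetical helper; KUBECTL=echo turns it into a dry run.
KUBECTL="${KUBECTL:-kubectl}"

install_knative_eventing() {
  local version="${1:?usage: install_knative_eventing <version>}"
  local base="https://github.com/knative/eventing/releases/download/${version}"
  local asset
  # Order matters: CRDs, core components, channel layer, then the broker
  for asset in eventing-crds.yaml eventing-core.yaml \
               in-memory-channel.yaml mt-channel-broker.yaml; do
    "$KUBECTL" apply -f "${base}/${asset}" || return 1
  done
}
```

Usage: `install_knative_eventing "$KN_VERSION"`.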

Channels are Kubernetes custom resources that define a single event forwarding and persistence layer.

Brokers can be used in combination with subscriptions and triggers to deliver events from an event source to an event sink.

Here, the default MT channel-based broker relies on the default In-Memory channel, which is not suitable for production.

We will keep the clusterDefault settings, but if needed, you can edit the broker configuration with the following command:

```shell
kubectl edit cm -n knative-eventing config-br-defaults
```
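With the broker class left to the cluster defaults, creating a Broker and a Trigger only takes a few lines of YAML. This sketch assumes an existing namespace and a Knative Service to receive the events; the `default` broker name, the `<service>-trigger` naming scheme, the helper function and the `KUBECTL` override are hypothetical. Without a `filter`, the Trigger forwards every event reaching the broker; in practice you would usually add `spec.filter.attributes` (for example on the CloudEvents `type`).

```shell
# Hypothetical helper; KUBECTL can point to another command for testing.
KUBECTL="${KUBECTL:-kubectl}"

create_broker_and_trigger() {
  local ns="${1:?usage: create_broker_and_trigger <namespace> <service>}"
  local svc="${2:?usage: create_broker_and_trigger <namespace> <service>}"
  # The Broker gets its class and channel from config-br-defaults
  "$KUBECTL" apply -f - <<EOF
apiVersion: eventing.knative.dev/v1
kind: Broker
metadata:
  name: default
  namespace: ${ns}
---
apiVersion: eventing.knative.dev/v1
kind: Trigger
metadata:
  name: ${svc}-trigger
  namespace: ${ns}
spec:
  broker: default
  # No filter: every event sent to the broker is delivered
  subscriber:
    ref:
      apiVersion: serving.knative.dev/v1
      kind: Service
      name: ${svc}
EOF
}
```

Usage: `create_broker_and_trigger default hello`.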

knative CLI

Even though kubectl can be used to manage Knative components, the kn CLI is also available; it simplifies otherwise complex procedures such as auto-scaling and traffic splitting.

```shell
curl https://github.com/knative/client/releases/download/${KN_VERSION}/kn-linux-amd64 -L > kn
chmod +x kn
sudo mv kn /usr/local/bin/kn
# Test it
kn version
```
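As an illustration of what kn saves you, here is a sketch creating a service, a broker and a trigger without writing any YAML. It assumes a kn release recent enough to include the `broker` and `trigger` subcommands (v0.22 should be); the resource names, the sample image, the helper function and the `KN` override variable are hypothetical.

```shell
# Hypothetical helper; KN=echo turns it into a dry run.
KN="${KN:-kn}"

kn_quickstart() {
  local ns="${1:-default}"
  # Knative Service from a public sample image
  "$KN" service create hello --namespace "$ns" \
    --image gcr.io/knative-samples/helloworld-go --env TARGET=Knative || return 1
  # Broker using the cluster default class
  "$KN" broker create default --namespace "$ns" || return 1
  # Trigger delivering every broker event to the hello service
  "$KN" trigger create hello-trigger --namespace "$ns" \
    --broker default --sink ksvc:hello
}
```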

Part 2: Deploy VMware Event Broker

See you in Part 2 to deploy the VMware Event Broker and some functions.

Credits

Title photo by Jonathan Kemper on Unsplash